Original paper: https://arxiv.org/abs/1907.05600

This is a recreation of the original paper using PyTorch.

Original paper code can be found here.

This is a homework for the Deep Learning course at National Research University Higher School of Economics, Moscow Russia.

The work has been done by Petr Zhizhin ([email protected]) and Dayana Savostyanova ([email protected]).

The final report about the recreation is available in Russian language. You can read it in the repository here.

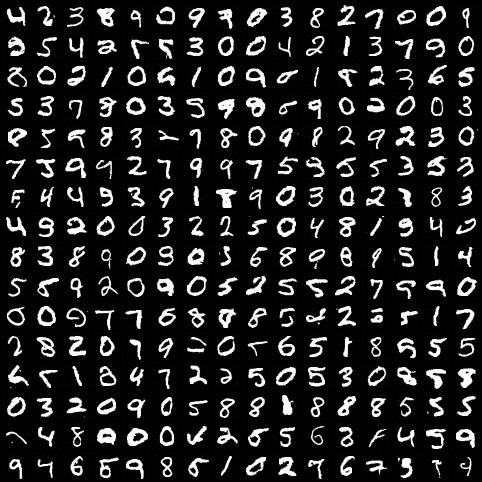

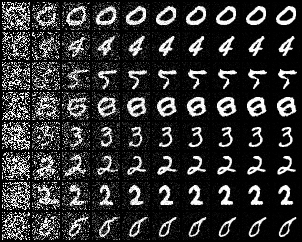

The model on MNIST was trained for 200k iterations on a Tesla V100 for straight ~48 hours.

|

|

|---|---|

| MNIST generated samples | MNIST generation process |

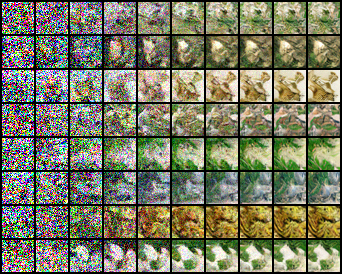

The model on CIFAR was trained on 150k iterations on a Tesla V100 for ~32 hours. The model is underfitted, we were out of budget to train further.

|

|

|---|---|

| CIFAR generated samples | CIFAR generation process |

The model can be trained and evaluated on two datasets: MNIST and CIFAR-10.

usage: langevin_images.py [-h] [--dataset DATASET]

[--dataset_folder DATASET_FOLDER] [--mode MODE]

[--n_generate N_GENERATE] [--download_dataset]

[--sigma_start SIGMA_START] [--sigma_end SIGMA_END]

[--num_sigmas NUM_SIGMAS] [--batch_size BATCH_SIZE]

[--model_path [MODEL_PATH]] [--log [LOG]]

[--save_every SAVE_EVERY] [--show_every SHOW_EVERY]

[--n_epochs N_EPOCHS]

[--show_grid_size SHOW_GRID_SIZE]

[--image_dim IMAGE_DIM]

[--n_processes [N_PROCESSES]]

[--target_device [TARGET_DEVICE]]

optional arguments:

-h, --help show this help message and exit

--dataset DATASET

--dataset_folder DATASET_FOLDER

--mode MODE

--n_generate N_GENERATE

--download_dataset

--sigma_start SIGMA_START

--sigma_end SIGMA_END

--num_sigmas NUM_SIGMAS

--batch_size BATCH_SIZE

--model_path [MODEL_PATH]

--log [LOG]

--save_every SAVE_EVERY

--show_every SHOW_EVERY

--n_epochs N_EPOCHS

--show_grid_size SHOW_GRID_SIZE

--image_dim IMAGE_DIM

--n_processes [N_PROCESSES]

--target_device [TARGET_DEVICE]You can look at the default arguments in langevin_images.py file.

By default, it trains on the MNIST dataset for 1 epoch on a CPU.

To download MNIST dataset and then train on it using a GPU, while making a checkpoint every 10 epochs and generating sample images every 5 epochs on Tensorboard:

python3 langevin_images.py --dataset mnist --save_every 10 --show_every 5 --n_epochs 500 --target_device cuda --download_datasetIt will create a Tensorboard run under runs folder. To monitor training, you can run:

tensorboard --logdir runsThe dataset will be downloaded to a folder named dataset (controlled by --dataset argument). The checkpoints will be available under langevin_model path (controlled by --model_path argument).

After trainining, you can generate samples using the following command. It will create .png images under langevin_model/generated_images.

python3 langevin_images.py --dataset mnist --mode evaluate --n_generate 100If you want to create a 16x16 images grid (as in the example above), you can run this command:

python3 langevin_images.py --dataset mnist --mode generate_images_grid --show_grid_size 16If you want to create an image with the annealing process (as in the example above), you can run:

python3 langevin_images.py --dataset mnist --mode generation_process --n_generate 8You can get the last checkpoints here:

| Dataset | Link |

|---|---|

| MNIST | 000451.pth |

| CIFAR | 000231.pth |

After you downloaded the checkpoints, put them in your checkpoints folder (langevin_model) by default. You also need to create an empty .valid file with the same name as your checkpoints. It tells the program that the checkpoint is written fully and is safe to be used.

The contributions are always welcome. We would highly appreciate the following contributions:

- If you have resources to train the CIFAR model, please provide a better checkpoint. The model should converge to a better result.

- If you want to do a code cleanup, this would be highly appreciated.